Citrix recently (17.12.2019) released an advisory warning of a critical vulnerability in all Citrix ADC and Gateway platforms. Late Friday (10.1.2020) multiple working exploits were posted publicly. Here are some tips on how to identify whether your device is compromised.

Changelog

- 14. Jan 2020: Added additional path for XML evidence (@dpisa007)

- 14. Jan 2020: Added crontab for user nobody

- 14. Jan 2020: Added bash logs (@joernrusch)

- 14. Jan 2020: Some additional attacks seen in the wild

- 14. Jan 2020: Added apache error logs (@joernrusch)

- 14. Jan 2020: Added “what to do if you are compromised” section (@_POPPELGAARD)

- 15. Jan 2020: Added link to SANS observed payloads list

First things first. The exploits allow anonymous remote code execution and allow unauthenticated attackers to take over the appliances with root privileges. I’ve tested several of the exploits myself and can confirm their functionality.

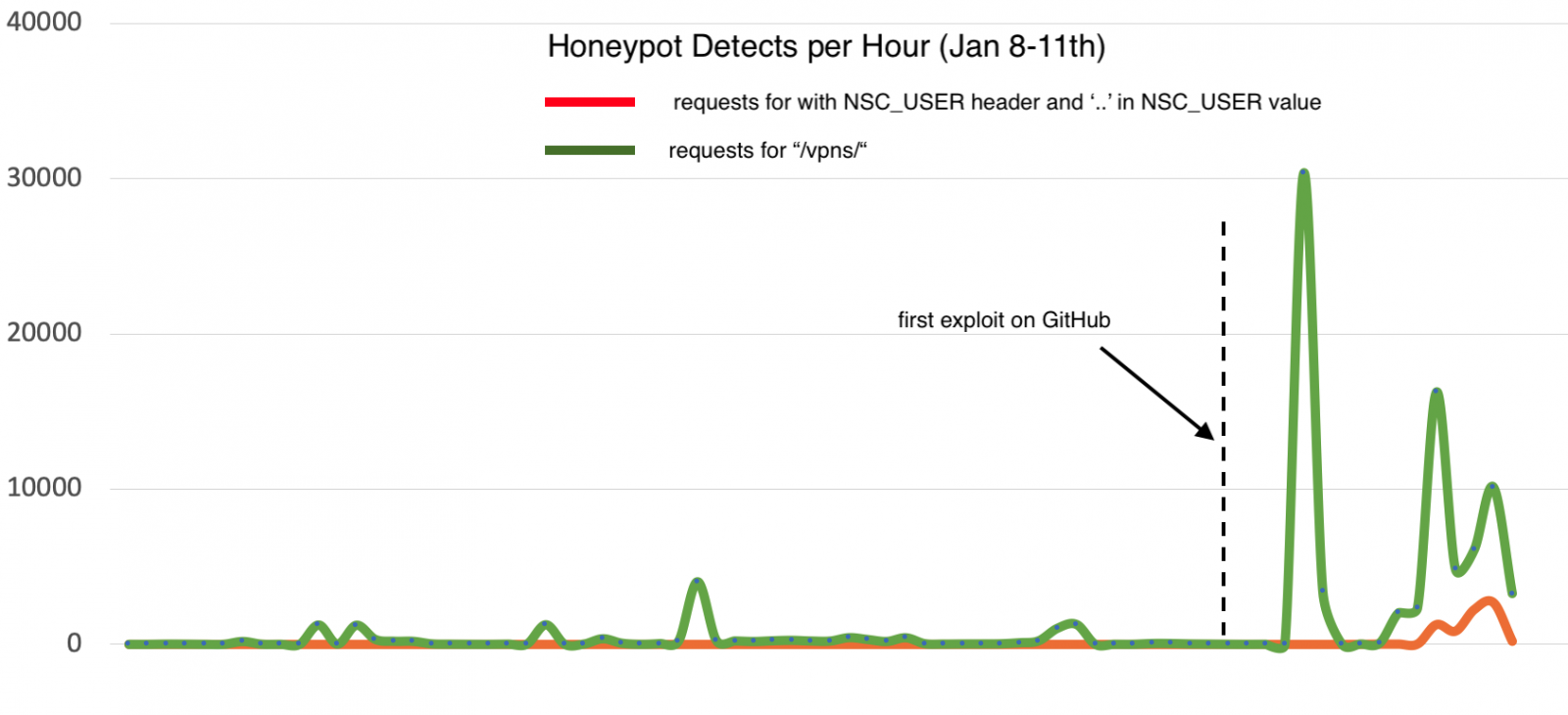

Only a few hours later, several security researchers noticed a tremendous growth in attacks on their Citrix ADC honeypots.

According to my current research all functional exploits are mitigated by the workaround published by Citrix alongside the advisory.

Even before the exploits were published, researchers had noticed massive scanning for vulnerable Citrix ADC devices to set up a catalog of potential targets for later use. Now those targets are being actively attacked! Therefore, if you haven’t applied the workaround you should consider your Citrix ADC compromised!

But even systems with the mitigations in place should receive an in-depth review, because you can never tell for sure when attacks might have started before they went broad.

Indicators of compromise

To get an idea whether your Citrix ADC is compromised, I’d recommend performing (at least!) the following steps:

Template files

The exploits all write files to the following directories. Scan those via:

shell ls /netscaler/portal/templates/*.xml

shell ls /var/tmp/netscaler/portal/templates

shell ls /var/vpn/bookmark/*.xml

If you find files similar to the following, you are likely to be compromised

Apache Log files

In addition, attempts to exploit the system leave traces in the Apache httpaccess log files. You can check for those via:

shell cat /var/log/httpaccess.log | grep vpns | grep xml

shell cat /var/log/httpaccess.log | grep "/\.\./"

shell gzcat /var/log/httpaccess.log.*.gz | grep vpns | grep xml

shell gzcat /var/log/httpaccess.log.*.gz | grep "/\.\./"

The following output is found on a system that was exploited:

However, a guarantee can never be given, as attackers might also clean up the traces of the initial exploitation. A few more things to validate are…
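The four access-log checks above can be folded into one helper. The following is only a sketch under a few assumptions: the function name `scan_httpaccess` is made up for illustration, `gzip -dc` stands in for `gzcat` so the same code also runs on systems that lack it, and the patterns are simply the ones from the commands above (lines containing both `vpns` and `xml`, or containing `/../`).

```shell
# Hypothetical helper (not part of Citrix ADC): print suspicious lines from
# one or more httpaccess logs, plain or gzip-compressed.
scan_httpaccess() {
    for log in "$@"; do
        [ -f "$log" ] || continue          # skip unmatched globs / missing files
        case "$log" in
            *.gz) gzip -dc "$log" ;;       # rotated, compressed log
            *)    cat "$log" ;;            # current, plain-text log
        esac
    done | grep -E 'vpns.*xml|xml.*vpns|/\.\./'
}

# Usage on the appliance (paths as used above):
# scan_httpaccess /var/log/httpaccess.log /var/log/httpaccess.log.*.gz
```

No output only means that none of these patterns matched; it does not prove the system is clean.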

Cron jobs

Attackers have been observed to obtain persistent access via scheduled tasks (“cron jobs” in Linux/BSD) to maintain their access even if the vulnerability gets patched. Check your crontab file for anomalies:

shell cat /etc/crontab

shell crontab -l -u nobody

The following is the output of a non-compromised system for you to compare:

Backdoor scripts

Running backdoors or other malicious tasks are often executed as Perl or Python scripts. Check for the presence of active running Perl or Python tasks:

shell ps -aux | grep python

shell ps -aux | grep perl

If you see more than the “grep” command itself, check the running scripts.

But beware, several Citrix ADC system-native tasks might appear as well. Some run scheduled so run the query again a few seconds later. Some are permanent (custom monitoring scripts for Storefront for example). Check those scripts to make sure they weren’t altered.
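One way to keep the grep command itself out of the output is the bracket trick sketched below. This is only an illustration: the function name `filter_script_procs` is made up, and it simply filters whatever `ps` output it receives on standard input.

```shell
# Hypothetical helper: filter `ps` output on stdin down to Perl/Python
# processes. The bracket expressions match "perl"/"python" but not the
# literal pattern text, so the grep process never lists itself.
filter_script_procs() {
    grep -E '[p]erl|[p]ython'
}

# Usage on the appliance:
# ps -aux | filter_script_procs
```

System-native Citrix ADC scripts will still show up, so the remaining lines need the same manual review as before.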

Crypto miners

Several attacks have been observed to install crypto miners. You can identify those by looking at the CPU-intensive processes by running:

shell top -n 10

Should you see any processes other than NSPPE-xx displaying high CPU usage, you might have found a crypto miner:

Bash logs

In addition to the apache logs, some payloads run bash commands which can be traced in the bash log files. Check for those via:

shell cat /var/log/bash.log | grep nobody

shell gzcat /var/log/bash.*.gz | grep nobody

Attackers will land with the rights of apache (which is “nobody”). So if they execute commands via bash – which a few of the payloads I’ve seen did – or if they spawn a remote shell they are likely to end up being logged here:

But beware, these logs rotate rather quickly (1-2 days) because ADC spams them with quite a bunch of messages each minute with its own scheduled tasks!
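Because the logs rotate so quickly, it helps to know which file a hit came from. A minimal sketch, assuming a made-up function name `scan_bash_logs` and using `gzip -dc` in place of `gzcat`: it searches the current and the rotated bash logs for a user name and prefixes every hit with the file it was found in.

```shell
# Hypothetical helper: search plain and gzip-compressed bash logs for lines
# mentioning a user, prefixing each hit with the name of its log file.
# Usage: scan_bash_logs USER FILE...
scan_bash_logs() {
    user=$1
    shift
    for log in "$@"; do
        [ -f "$log" ] || continue          # skip unmatched globs / missing files
        case "$log" in
            *.gz) gzip -dc "$log" ;;       # rotated, compressed log
            *)    cat "$log" ;;            # current, plain-text log
        esac | awk -v f="$log" -v u="$user" 'index($0, u) { print f ": " $0 }'
    done
}

# Usage on the appliance (paths as used above):
# scan_bash_logs nobody /var/log/bash.log /var/log/bash.*.gz
```

Redirecting the output to a file outside `/var/log` keeps the evidence around after the next rotation.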

Apache error logs

A bit more tricky is the analysis of the apache error logs. These log failed execution attempts (and I’ve seen a few misspelled commands or syntax errors). You might be able to use the following filter to catch some events:

shell "cat /var/log/httperror.log | grep -B2 -A5 Traceback"

shell "gzcat /var/log/httperror.log.*.gz | grep -B2 -A5 Traceback"

The following is a failed attempt at executing Python because the path wasn’t referenced correctly:

But again, this might be only one type of error. Ideally you should review the logs manually, especially if you’re in doubt:

shell cat /var/log/httperror.log

shell gzcat /var/log/httperror.log.*.gz

Firewall

In addition to the local indicators on the Citrix ADC, watch your surrounding firewalls for any irregular traffic. Most likely attackers will use the Citrix ADC as a jump host to penetrate the network further.

Attacks in the wild

Unfortunately, despite issuing warnings to all my customers well in advance, I had to observe a few real-world compromises. Luckily so far they all failed at some point – as far as I can tell.

I’ve observed the following behaviours in the wild so far:

- Retrieval of ns.conf

- Dropping of encoded Perl and CGI Backdoors

- Downloading of binary payloads which override the default httpd with a (likely) malicious version of apache

- Altering of crontab

- Retrieval of /etc/passwd

- Retrieval of the already present *.xml files

- Downloading of perl payloads and replacing existing Citrix ADC scripts

- Payloads that remove previously planted *.xml files and other artifacts

- Payloads that closed the door behind them by fixing newbm.pl after creating their own backdoor

The SANS Institute has published a very comprehensive list of payloads observed in the wild, which covers most of the payloads I have seen as well. It’s a good read if you want to dig deeper into what happens once a compromise has taken place: https://isc.sans.edu/diary/Citrix+ADC+Exploits%3A+Overview+of+Observed+Payloads/25704

What to do if you are compromised?

The first thing you should do (after taking the appliance offline if possible!) is to try to reverse engineer what the attacker did by investigating the logs and files further. Here’s a sample of a simple ns.conf exfiltration via the template XML files, for example:

But that’s a simple one. There are more complicated multi-encoded payloads and commands, scripts that download other scripts and much more.

Once you know what happened, you might be able to clean up after them. However, again, attackers might have performed several steps to hide their presence already. If you’re unsure about the state of your device or if you have been involved in a more complex/successful attack, your safest bet will be to (yes, painful, but…):

- Remove your Citrix ADC from the network

- Re-image the Citrix ADC (both in HA) and re-import the config

  While relatively easy for VPX (you might even have a snapshot backup), it’s a slow process for MPX, as you have to go through Citrix tech support and might even need to get a new HDD with the clean image shipped

- Investigate all servers the Citrix ADC has a connection to for further compromise

  This might include the typical Citrix candidates like Storefront, etc., as well as DCs for LDAP, but might also include a huge bunch of web and application servers in a true ADC deployment

- Change all ADC local account passwords

  While stored hashed in the ns.conf, those might be crackable

- Change all ADC AD service account passwords

  While stored encrypted in the ns.conf, those might be crackable

- Change the passwords of all AD user accounts that used the ADC to log in

  All users? Yes, remember you are able to take wireshark traces on ADC including SSL session keys, which can then be used to decrypt the whole communication, including user login credentials

- Revoke and renew all SSL certificates

  Private keys might have been downloaded. While private key passwords are stored encrypted in the ns.conf, those might be crackable

…and I’ve probably forgotten a few vectors, let me know and I’ll update the list!

Also thanks to @_POPPELGAARD for the great discussion and valuable input on the topic!

Firmware updates

Citrix currently has no patch available – however, according to a recent blog post Citrix announced release dates for a permanent fix throughout January.

The announced release dates are:

| Version | Release date |
| --- | --- |
| 13.0 | 27.1.2020 |
| 12.1 | 27.1.2020 |
| 12.0 | 20.1.2020 |
| 11.1 | 20.1.2020 |
| 10.5 | 31.1.2020 |

I recommend scheduling an update ASAP after release and monitoring the official advisory closely!

I was able to disable features, i.e. the WAF.

What to do if an MPX was hacked? How do I do a hardware factory reset on the device?

As far as I know you have to go through Citrix support to either get a USB re-install image or a new HDD with a blank image – not confirmed though.

Definitely reach out to support if you need to reset an MPX! And feel free to share your experience for me to include in the list.

You can do a factory reset (see https://docs.citrix.com/en-us/citrix-hardware-platforms/mpx/wiping-your-data-before-sending-your-adc-appliance-to-citrix.html):

> shell

# cd /flash/.recovery

# sh rc.system_wipe_and_reset

You also have to run a firmware update afterwards so that it creates all the files needed on /var, for example for the portal themes. Without it you will get a white page for the VPN vserver.

I also did this:

> shell

# cd /flash/.recovery

# sh rc.system_wipe_and_reset

But something went wrong

After that NS started in Developer mode and showed this message:

Recovery file flash.label on /var/.flash_tmp does not exist

Flash wipe failed!

Flash reinitialization failed!

Very good article that sums up the issues and mitigations – good job

I want to let you know that we found a bug with the responder policy

Firmware: 12.1.50.28.nc

**Release Notes – Fixed …**

In a Citrix Gateway appliance, responder and rewrite policies bound to VPN virtual servers might not process the packets that matched the policy rules.

[From Build 51.19]

[# NSHELP-18311]

In a Citrix Gateway appliance, responder and rewrite policies bound to VPN virtual servers might not process the packets that matched the policy rules.

[From Build 50.31]

[# NSHELP-18311]

Firmware 12.1 55.13.nc will work

We have a bunch of XML files “changed” between 11-01-202 and 13-01-202 (when we patched), but none of the other logs shows any sign. How do I verify whether the XML files are legit or we are compromised? The XML files seem harmless, but I’m not sure.

I’ve seen a few XML files created without malicious content as well. However, the only legit XML files that should be there are named after users you know – these are where legit users store bookmarks if you use a gateway configuration which allows them to add bookmarks (Unified Gateway).

If there are others, you had at least an attempted attack. But as long as there are no commands embedded in the XML you should be fine – unless they covered their traces otherwise, which is hard to tell unfortunately.

Thanks, we ended up replacing the NetScalers. Too many suspicious XML files.

The XML files are located in /netscaler/portal/templates/

Great article!

Does someone know if some processes like

91629 nobody 1 44 0 196M 115M accept 0 0:00 0.00% httpd

are ok?

We see three to four processes running under nobody and with almost no cpu usage. We did not detect any other suspicious behavior or hints.

If you implemented the mitigating steps, do you feel it necessary to upgrade to the fixed firmware once released, asap?

Is it safe to just import ns.conf from a compromised device?

I haven’t seen any ns.confs being altered (yet). However, I would always quickly double-check it for any signs of misuse (added users, added load balancers pointing to internal systems not intended to be public, etc.). But these should be fairly easy to spot – if you’re familiar with the Netscaler CLI.

Thanks Manuel. I ended up adding the config line by line just to be sure as it wasn’t very complex but haven’t actually found any signs of misuse either.

I have looked through a lot of ADC’s and have not spotted any signs of it being altered. But have checked them all before importing them on new appliances.

Thanks Brian, I didn’t see any malicious changes in the config file either.
